The technology driving the automated vehicle revolution relies on the car's ability to see and understand the world around it.

Sensors act as the eyes and ears of the vehicle, collecting the information it needs. Companies are taking several different approaches to making this happen: some rely on visual information from cameras, while others use radar and light detection to see and analyze the world.

Here's a quick overview of the ways driverless cars can see the world. Check out the full slides from 2025AD below for more information and detailed drawings of how these sensors work.

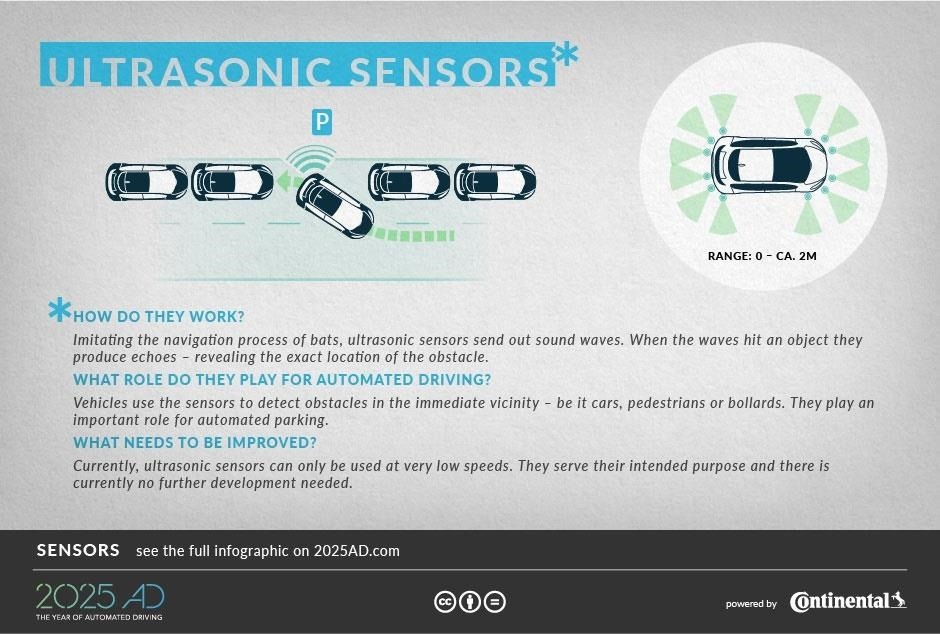

Ultrasonic Sensors

Ultrasonic sensors rely on sound to see the world around them. By measuring the echo produced when a sound wave hits an object, the sensor can tell how far away that object is. Since sound moves much slower than light, these sensors are useful only at low speeds and are often used in concert with other technologies in autonomous vehicles.

For example, Tesla's Model S sedan uses 12 long-range ultrasonic sensors that provide 360-degree coverage and back up the data from the car's other sensors. This allows the car to drive on Autopilot, which Tesla says makes for "safer and less stressful highway driving."
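The echo-ranging idea behind ultrasonic sensors comes down to one formula: the sound pulse travels to the object and back, so the distance is half of speed times round-trip time. The sketch below assumes the speed of sound in dry air at about 20 °C (343 m/s); the function name is hypothetical, and real sensors also correct for temperature and filter out noise.

```python
# Approximate speed of sound in dry air at about 20 °C (an assumption;
# it varies with temperature and humidity).
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_distance_m(round_trip_time_s: float,
                    speed_m_per_s: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Distance to the reflecting object, given the echo's round-trip time.

    The pulse travels out to the object and back again, so the one-way
    distance is half of speed * time.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed_m_per_s * round_trip_time_s / 2.0


# An echo that returns after 10 ms puts the object about 1.7 m away.
print(f"{echo_distance_m(0.010):.3f} m")
```

The slow speed of sound shows up in the waiting time: an echo from an object 5 m away takes about 29 ms to return, and a car moving at 30 m/s (about 108 km/h) covers nearly 0.9 m in that time.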

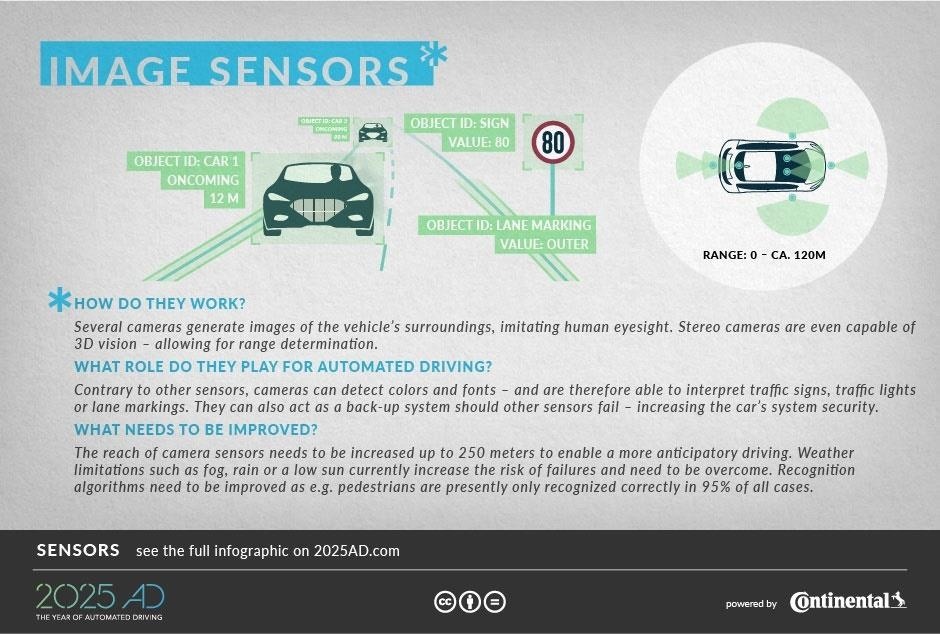

Image Sensors

You might think that cameras, which imitate eyes and even allow for 3D vision, would be the best way to scan a car's surroundings, but the reach of these sensors just isn't there. While cameras can distinguish color and read lettering, their effectiveness is hampered by weather conditions, and image-recognition algorithms still need some work.
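One common way cameras get 3D vision is a stereo pair: two cameras side by side see the same point at slightly different pixel positions, and that shift (the disparity) gives depth through Z = f · B / d. The article doesn't say which method any carmaker uses, so treat this as an illustrative sketch; the focal length and camera spacing below are hypothetical. It also hints at one reason for the limited reach: far-away objects produce only a few pixels of disparity, so a one-pixel error moves the depth estimate a lot.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by two horizontally offset cameras (pinhole model).

    Z = f * B / d, where f is the focal length in pixels, B is the distance
    between the two cameras in meters, and d is how many pixels the point
    shifts between the left and right images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 1000 px focal length, cameras mounted 0.3 m apart.
FOCAL_PX, BASELINE_M = 1000.0, 0.3
for d in (30.0, 3.0, 2.0):
    print(f"disparity {d:4.1f} px -> depth {stereo_depth_m(FOCAL_PX, BASELINE_M, d):6.1f} m")
```

With these numbers, 30 px of disparity means 10 m, while 3 px and 2 px mean 100 m and 150 m: a single pixel of error at long range shifts the estimate by 50 m.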

Tesla CEO Elon Musk has said in the past that self-driving can be achieved through this kind of passive camera work, passing up more sensitive technology such as laser range detection. At a 2015 press conference, he noted:

I don't think you need LIDAR. I think you can do this all with passive optical and then with maybe one forward RADAR… if you are driving fast into rain or snow or dust. I think that completely solves it without the use of LIDAR. I'm not a big fan of LIDAR, I don't think it makes sense in this context.

We do use LIDAR for our Dragon spacecraft when docking with the space station. And I think it makes sense in that case and we've put a lot of effort into developing that. So it's not that I don't like LIDAR in general, I just don't think it makes sense in a car context. I think it's unnecessary.

Companies like Mobileye, recently purchased by Intel for $15 billion, have used camera-based vision technology like this for years to build driver-assistance features such as smart cruise control, adaptive braking, and automatic lane detection.

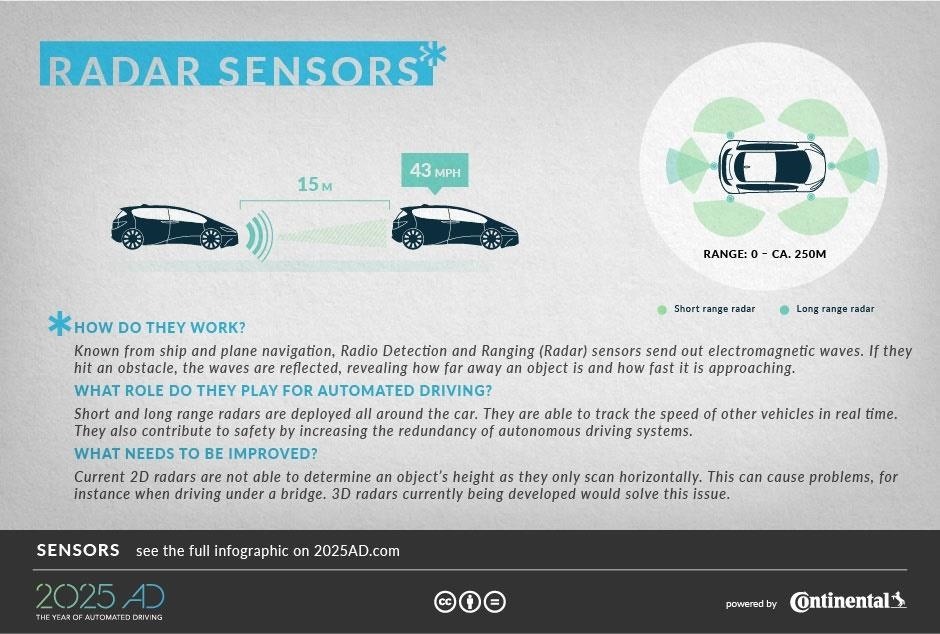

Radar Sensors

Like ships and planes, driverless cars can use radar to see the world around them, even in spots that cameras can't see into. Radars send out electromagnetic waves (typically in the microwave band) that are reflected back when they hit an obstacle; the time the echo takes to return tells the car how far away the obstacle is.

Radar comes in two types: short-range radars detect objects in the vicinity of a car (~30 meters) at low speeds, while long-range radars cover relatively longer distances (~200 meters) and can work at high speeds. Both are important in the development of Level 4 and 5 driverless cars, as well as in advanced driver-assistance systems.
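Radar ranging uses the same out-and-back arithmetic as an ultrasonic echo, just with the speed of light instead of the speed of sound. The sketch below converts a round-trip delay into a distance and checks it against the rough ~30 m and ~200 m coverage figures above. It is a simplification: many automotive radars infer the delay indirectly (for example, from frequency-modulated chirps) rather than timing a single pulse, and the function names here are hypothetical.

```python
# Speed of light in a vacuum (m/s); close enough for air in this sketch.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

# Rough coverage figures from the text above.
SHORT_RANGE_MAX_M = 30.0
LONG_RANGE_MAX_M = 200.0


def radar_range_m(round_trip_time_s: float) -> float:
    """One-way distance to a target from the echo's round-trip delay."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def covering_radar(distance_m: float) -> str:
    """Shortest-reach radar type whose rough coverage includes this distance."""
    if distance_m <= SHORT_RANGE_MAX_M:
        return "short-range"
    if distance_m <= LONG_RANGE_MAX_M:
        return "long-range"
    return "out of range"


for delay_ns in (100, 1_000, 2_000):
    d = radar_range_m(delay_ns * 1e-9)
    print(f"{delay_ns:>5} ns -> {d:6.1f} m ({covering_radar(d)})")
```

Because light is so fast, the delays are tiny: a car 150 m ahead returns its echo in about one microsecond.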

This technology is useful for tracking other vehicles, but 2D radar can't tell an object's height because it scans only horizontally. That can certainly pose a problem when it comes to passing under a bridge! Thankfully, 3D radars are being developed.
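The bridge problem becomes concrete with a little trigonometry. If a 3D radar reports an elevation angle for each return, the height of the reflecting surface is roughly the sensor's mounting height plus range × sin(elevation); a 2D radar has no elevation angle, so the height is simply unknown. This is a hypothetical sketch: the mounting height, vehicle height, and safety margin are made-up values, and it ignores beam width and road slope.

```python
import math
from typing import Optional


def target_height_m(range_m: float, elevation_deg: Optional[float],
                    sensor_height_m: float) -> Optional[float]:
    """Height above the road of a radar return.

    A 3D radar reports an elevation angle for each return; a 2D radar that
    scans only horizontally does not, so the height is unknown (None).
    """
    if elevation_deg is None:
        return None
    return sensor_height_m + range_m * math.sin(math.radians(elevation_deg))


def bridge_is_clear(range_m: float, elevation_deg: Optional[float],
                    sensor_height_m: float, vehicle_height_m: float,
                    margin_m: float = 0.3) -> Optional[bool]:
    """True/False if the overhead structure clears the vehicle, None if unknown."""
    h = target_height_m(range_m, elevation_deg, sensor_height_m)
    if h is None:
        # 2D radar: a bridge overhead and a stalled car ahead look alike.
        return None
    return h > vehicle_height_m + margin_m


# Hypothetical overpass 100 m ahead, seen 2.5 degrees above a radar mounted at 0.5 m.
print(bridge_is_clear(100.0, 2.5, 0.5, vehicle_height_m=1.5))   # 3D radar
print(bridge_is_clear(100.0, None, 0.5, vehicle_height_m=1.5))  # 2D radar
```

With the 3D reading, the return sits about 4.9 m above the road, well clear of a 1.5 m car; with the 2D reading, the function can only answer "don't know."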

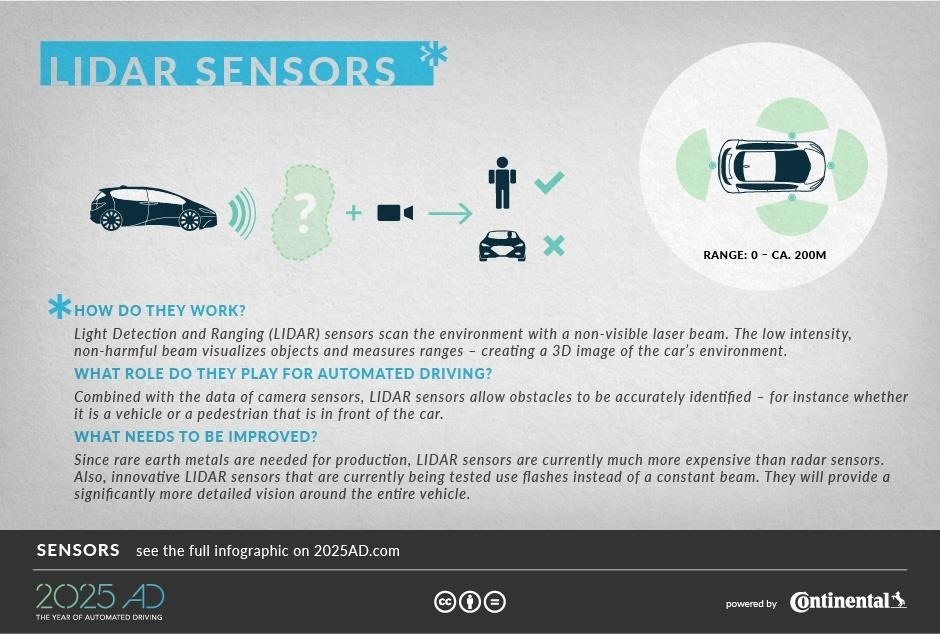

LIDAR Sensors

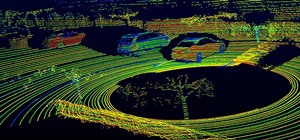

Light detection and ranging (LIDAR) sensors scan their surroundings with an invisible laser beam, creating a 3D image that is very useful for navigation.
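Each laser return gives a distance (from the pulse's round-trip time) plus the direction the beam was pointing, and converting those into x, y, z coordinates is how a scan becomes a 3D image, often called a point cloud. The sketch below assumes a hypothetical record format with azimuth and elevation angles and a sensor-centered frame (x forward, y left, z up); real LIDAR units each define their own data formats and conventions.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


@dataclass
class LidarReturn:
    """One laser return (hypothetical record format for this sketch)."""
    round_trip_time_s: float  # time for the pulse to come back
    azimuth_deg: float        # horizontal angle: 0 = straight ahead, +90 = left
    elevation_deg: float      # vertical angle: 0 = level with the sensor, + = up


def to_point(ret: LidarReturn) -> Tuple[float, float, float]:
    """Convert a return to (x forward, y left, z up) in meters, sensor at origin."""
    r = SPEED_OF_LIGHT_M_PER_S * ret.round_trip_time_s / 2.0
    az = math.radians(ret.azimuth_deg)
    el = math.radians(ret.elevation_deg)
    return (
        r * math.cos(el) * math.cos(az),
        r * math.cos(el) * math.sin(az),
        r * math.sin(el),
    )


def point_cloud(returns: List[LidarReturn]) -> List[Tuple[float, float, float]]:
    """Turn every return in a scan into a 3D point."""
    return [to_point(r) for r in returns]


scan = [
    LidarReturn(200e-9, 0.0, 0.0),   # about 30 m straight ahead
    LidarReturn(100e-9, 90.0, 0.0),  # about 15 m to the left
    LidarReturn(100e-9, 0.0, -5.0),  # a return angled 5 degrees downward
]
for x, y, z in point_cloud(scan):
    print(f"x={x:6.2f}  y={y:6.2f}  z={z:6.2f}")
```

A spinning sensor repeats this for many thousands of returns per second, which is what builds up the detailed 3D picture around the car.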

Many companies currently chasing the driverless dream are using LIDAR, though the equipment to perform this scanning is still out of reach for consumer vehicles: it requires rare earth metals and is vastly more expensive to produce than radar.

Flashing light is being tested in place of a constant beam in the hope of providing more detailed vision around the car. Meanwhile, companies like Quanergy are working to produce lower-cost LIDAR sensors.

Speaking about the company's S3 sensor, Louay Eldada, CEO of Quanergy Systems, Inc., said in a press release:

There is high customer demand for lower cost, more reliable high-end sensor systems. The S3 is the only product that can satisfy this demand and deliver true solid state 3D sensing at a reasonable price. We're in a unique position to finally bring this technology to market, and enable amazing innovation in self-driving and machine vision applications.

Waymo's self-driving cars currently use LIDAR sensors, which Google is reportedly building in-house in an effort to decrease costs.

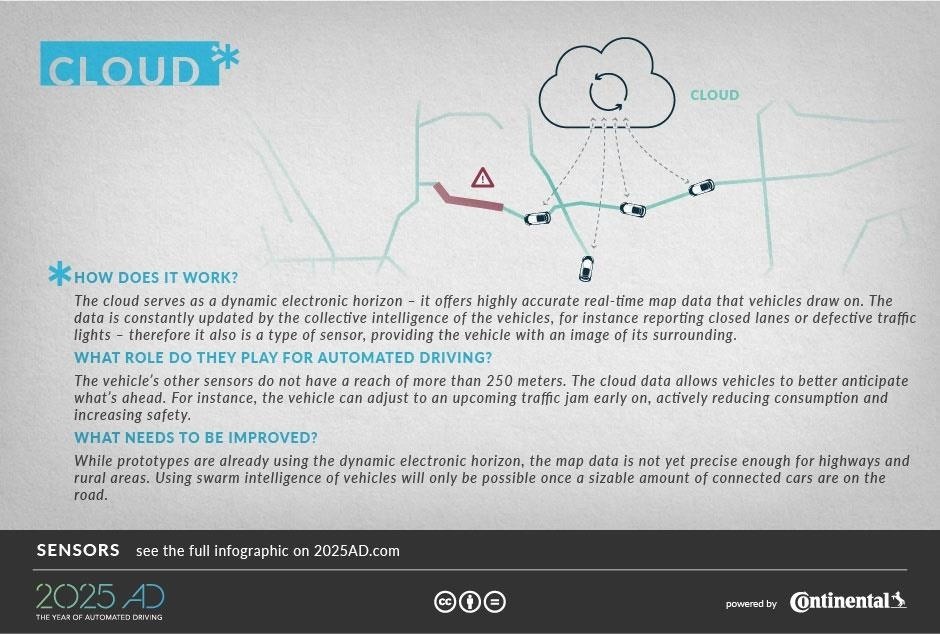

The Cloud

Lastly, the Cloud provides real-time map data that is constantly updated thanks to a collective intelligence shared among multiple cars along a given road. As a result, driverless cars can know what's ahead of them before they reach an obstacle like a traffic jam, lane change, or construction.

This data is still a work in progress and isn't yet precise enough for rural areas and highways. Indeed, swarm intelligence is only possible when there is a significant number of connected vehicles on the road. And even then, it can only report road conditions that other cars ahead have already encountered, not objects that suddenly jump out in front of the car, which is the kind of awareness higher levels of autonomy require.
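Why swarm intelligence needs many connected cars is easy to see in a toy aggregator. The article doesn't describe how any real service combines reports, so everything here is a hypothetical sketch: the report format, road-segment IDs, the 10-minute window, and the rule that at least three different cars must agree before a hazard is trusted. On a busy road the threshold is met quickly; on a quiet rural road a single report never gets confirmed.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class HazardReport:
    """One car's report to the cloud (hypothetical format)."""
    vehicle_id: str
    segment_id: str   # hypothetical road-segment identifier
    hazard: str       # e.g. "traffic jam", "construction"
    timestamp_s: float


def confirmed_hazards(reports: List[HazardReport], now_s: float,
                      window_s: float = 600.0,
                      min_vehicles: int = 3) -> Dict[str, Set[str]]:
    """Map each road segment to the hazards reported by at least
    `min_vehicles` distinct cars within the last `window_s` seconds."""
    # (segment, hazard) -> set of distinct cars that reported it recently
    seen: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    for r in reports:
        if 0 <= now_s - r.timestamp_s <= window_s:
            seen[(r.segment_id, r.hazard)].add(r.vehicle_id)

    result: Dict[str, Set[str]] = defaultdict(set)
    for (segment, hazard), vehicles in seen.items():
        if len(vehicles) >= min_vehicles:
            result[segment].add(hazard)
    return dict(result)


reports = [
    HazardReport("car-1", "A1-km12", "construction", 100.0),
    HazardReport("car-2", "A1-km12", "construction", 130.0),
    HazardReport("car-3", "A1-km12", "construction", 170.0),
    HazardReport("car-4", "B7-km3", "traffic jam", 160.0),  # lone report, quiet road
]
print(confirmed_hazards(reports, now_s=200.0))  # {'A1-km12': {'construction'}}
```

Requiring several independent reporters trades speed for reliability: one faulty sensor can't create a phantom hazard, but a road with little connected traffic gets no warnings at all.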

Many driverless cars use these sensors in concert, mixing and matching technologies to gather as much information as possible.
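One simple, textbook way to combine sensors is inverse-variance weighting: if several sensors independently measure the same distance, trust each one in proportion to how precise it is. The article doesn't say how any particular car fuses its data, so this is an illustrative sketch, not a production method. It assumes independent, Gaussian-like errors, and the readings and standard deviations below are hypothetical.

```python
from typing import List, Tuple


def fuse_estimates(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent distance estimates.

    Each estimate is (value_m, std_dev_m). Sensors with smaller uncertainty
    get more weight, and the fused standard deviation is smaller than that
    of any single input.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = []
    for _, sigma in estimates:
        if sigma <= 0:
            raise ValueError("standard deviations must be positive")
        weights.append(1.0 / sigma ** 2)
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, (1.0 / total) ** 0.5


# Hypothetical readings of the distance to the same car ahead.
readings = [
    (41.0, 2.0),  # camera: noisier at range
    (40.2, 0.5),  # radar
    (40.0, 0.1),  # LIDAR
]
value, sigma = fuse_estimates(readings)
print(f"fused: {value:.2f} m +/- {sigma:.2f} m")  # fused: 40.01 m +/- 0.10 m
```

The precise LIDAR reading dominates here, but the camera and radar still nudge the result and shrink its uncertainty slightly; if the LIDAR failed, the same function would fall back gracefully on the other two.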
