A vulnerability in the design of LiDAR components for driverless cars is far worse than anything we've seen outside the CAN bus sphere, and it could have deadly consequences if exploited.

The hack is also going to be hard to fix, researchers Hocheol Shin, Dohyun Kim, Yujin Kwon, and Yongdae Kim, from the Korea Advanced Institute of Science and Technology, wrote in a paper describing a study supported by Hyundai.

The researchers described how they engineered attacks on driverless-car LiDAR sensors that are potentially more lethal and stealthier than previous, similar attack vectors. The exploits both spoof LiDAR signals and cause denial of service through signal saturation. The paper, called "Illusion and Dazzle: Adversarial Optical Channel Exploits against LiDARs for Automotive Applications," was published by the International Association for Cryptologic Research.

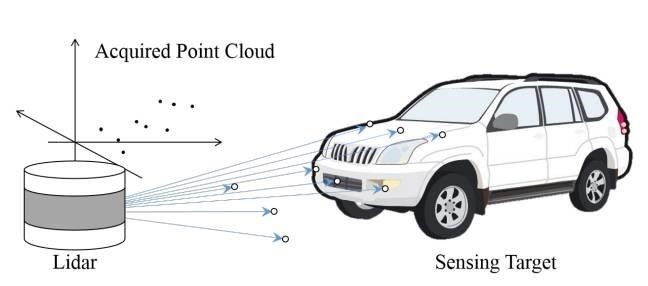

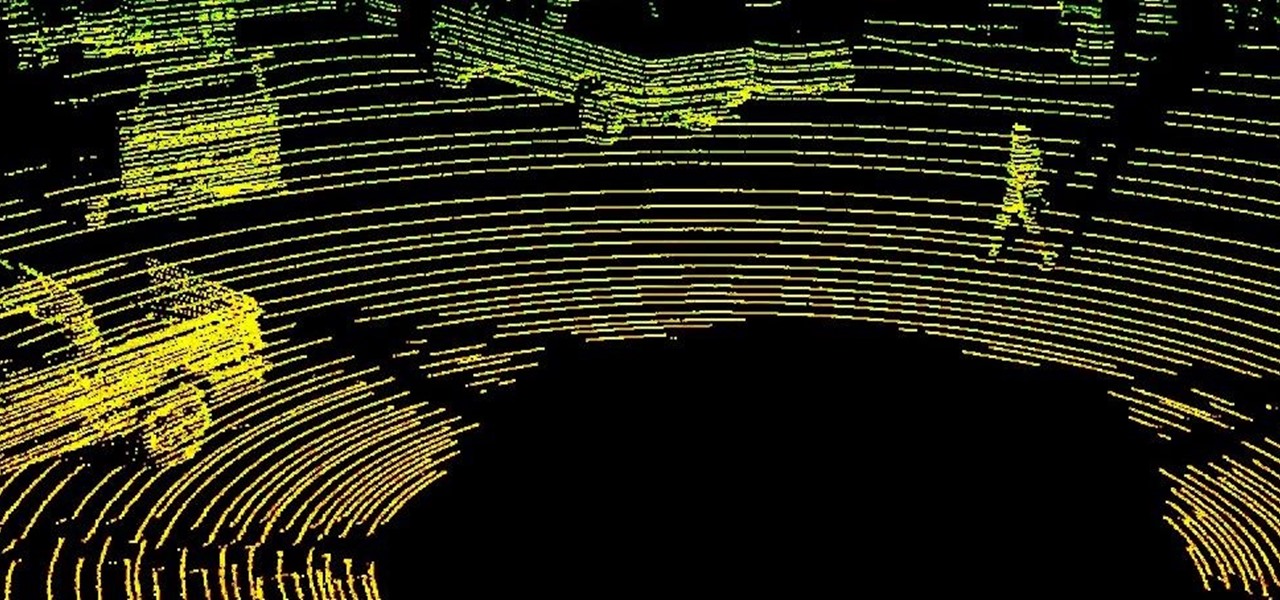

The Korean researchers' hack exploits how reflected LiDAR beams form a "point cloud" of dots that the car's onboard computer, trained with machine learning, interprets in real time. The artificial intelligence (AI) then decides whether to change the car's steering, braking, or acceleration to react to a detected object while driving.
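To make the "dots" concrete: a LiDAR fires laser pulses, times each echo, and combines the round-trip time with the beam's direction to place a single point in 3D space. The sketch below is a minimal illustration assuming an idealized sensor with no calibration offsets; the function name `return_to_point`, the axis convention (x forward, y left, z up), and the sample timings are hypothetical and do not reflect Velodyne's actual data format.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def return_to_point(round_trip_s, azimuth_deg, elevation_deg):
    """Convert one LiDAR echo into an (x, y, z) point in metres.

    The pulse travels out and back, so the range is half the round-trip distance.
    Hypothetical axis convention: x forward, y left, z up.
    """
    r = SPEED_OF_LIGHT * round_trip_s / 2.0
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = r * math.cos(el) * math.cos(az)
    y = r * math.cos(el) * math.sin(az)
    z = r * math.sin(el)
    return (x, y, z)


# Simulated echoes: (round-trip time in s, azimuth in degrees, elevation in degrees).
echoes = [
    (66.7e-9, 0.0, 0.0),     # about 10 m straight ahead
    (133.4e-9, 45.0, 0.0),   # about 20 m away, ahead and to the left
    (200.1e-9, -30.0, 2.0),  # about 30 m away, ahead and to the right, slightly raised
]
cloud = [return_to_point(*echo) for echo in echoes]
for point in cloud:
    print(tuple(round(c, 2) for c in point))
```

Each spoofed dot in the attack corresponds to one such point: if an attacker can make the receiver register an echo at a chosen time and direction, the timing and angle alone do not distinguish it from a real reflection.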

For the spoofing attack, the researchers created fake dots representing objects on the road that can appear very close to a moving car. When the car's LiDAR detects these dots, the car's AI perceives an imminent collision and takes a calculated risk, for example locking the brakes for a crash stop that could end in a ditch or gully at the side of the road. Suddenly braking to a stop on a busy freeway in bumper-to-bumper traffic is one scenario that could have deadly consequences.

> Because the braking distance is the distance required solely for braking, even autonomous vehicles have no room for checking the authenticity of the observed dots, but need to immediately activate emergency braking or evasive [maneuvers]. Such sudden actions are sufficient to endanger the surrounding vehicles.
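The researchers' argument rests on how much road a car needs to stop. A minimal sketch, assuming the textbook constant-deceleration model (braking distance v² / (2μg) on a flat road) plus an optional reaction time, shows why a fake obstacle placed inside that distance leaves no time for verification. The friction coefficient of 0.7 (roughly dry asphalt), the 0.1 s reaction latency, and the function names are illustrative assumptions, not values from the paper.

```python
G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance(speed_mps, friction=0.7, reaction_s=0.0):
    """Distance (m) to stop: reaction distance plus braking distance v^2 / (2 * mu * g).

    Assumes a flat road and constant deceleration mu * g (an idealized model).
    """
    reaction = speed_mps * reaction_s
    braking = speed_mps ** 2 / (2 * friction * G)
    return reaction + braking


def inside_stopping_distance(obstacle_m, speed_mps, friction=0.7, reaction_s=0.0):
    """True if a (possibly spoofed) obstacle is already within the stopping distance."""
    return obstacle_m <= stopping_distance(speed_mps, friction, reaction_s)


if __name__ == "__main__":
    speed = 30.0  # m/s, about 108 km/h
    print(f"stopping distance: {stopping_distance(speed, reaction_s=0.1):.1f} m")
    # A fake object 50 m ahead is inside that distance, so the car must react at once.
    print(inside_stopping_distance(50.0, speed, reaction_s=0.1))
```

At 30 m/s this model gives roughly 68.5 m, so a fake object spoofed 50 m ahead is already too close to double-check. A wet road (lower friction) only widens that window.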

In the saturation attack, the researchers sent light signals to the LiDAR component, effectively "blinding" it: the LiDAR's receiver becomes saturated with light from the attacker's device. The most obvious risk is that the LiDAR can no longer recognize objects the car might hit, such as a stopped car, road debris, or a pedestrian in the car's path.

The researchers were able to exploit the refractive angles of light signals sent to the LiDAR device, a Velodyne unit (although, the researchers said, devices from Ibeo and Quanergy are also vulnerable). As a result, the attacker can stay at an angle off to the side of the car instead of directly in front of the LiDAR.

With previous LiDAR attack vectors, the signal had to be transmitted perpendicular to the LiDAR. More importantly, in previous exploits the spoofed object could not be placed any distance away from the light emitter: the device used to create a fake object had to be in front of the LiDAR, at the same distance from the car as the spoofed object.

It's All Good, People

Papers like this one are controversial and often met with hostility. In one incident, Volkswagen went to great lengths to keep secret a hack that could be used to steal cars, going to court to try to stop it from being published.

But in the spirit of white hat hacking, the idea is that by being transparent and exposing security flaws publicly, researchers let engineers fix them before they become a real problem. Also, since mass production of LiDAR components has yet to begin, LiDAR is a far less attractive target than the controller area network (CAN) bus.

In one case, a Chinese security firm was able to seize control of the braking, infotainment panel, door locks, and other systems on a Tesla Model S before Tesla patched the security hole with an over-the-air (OTA) software update. Such cars make a relatively attractive target, since there are tens of thousands of Teslas in circulation, while the Level 3 driverless cars at risk from LiDAR attacks have yet to launch commercially (Teslas also do not use LiDAR components).

At this point, publishing the LiDAR exploit "raises the flag," Egil Juliussen, an analyst for IHS Automotive, told Driverless.

Much more research is going into CAN bus exploits because of the sheer number of cars on the road that use the bus today. But in a few years, there will be enough driverless models on the road for LiDAR attacks to become an issue.

The LiDAR attacks are also limited in scope. While unpleasant to think about, a sniper with a long-range rifle or someone tossing heavy objects from a bridge over a busy freeway poses a more immediate risk than the LiDAR attack the Korean researchers describe. Beyond causing harm and mayhem, an attacker would have few possible motives for carrying it out.

"For somebody to do this LiDAR attack, it is very specific," says Juliussen. "There's not really much financial incentive since it does not involve mass exploits — unless it is for murder for hire."

The Hard Big Fix

Meanwhile, the Korean researchers say the LiDAR vulnerability can be fixed only with difficulty. Potential "mitigative approaches" exist, but they are "either technically/economically infeasible or are not definitive solutions to the presented attacks."

> We do not advocate the complete abandonment of the transition toward autonomous driving, because we believe that its advantages can outweigh the disadvantages, if realistic adversarial scenarios are appropriately mitigated. However, such considerations are currently absent; therefore, automakers and device manufacturers need to start considering these future threats before [it is] too late.

Indeed, while few people have the advanced understanding of physics and light-wave properties required to launch a LiDAR attack, it will be interesting to see how the industry reacts to the exploit. At the DEF CON hacking conference in Las Vegas later this month, the LiDAR exploit will very likely prove to be just one of many attacks on driverless cars to come.

